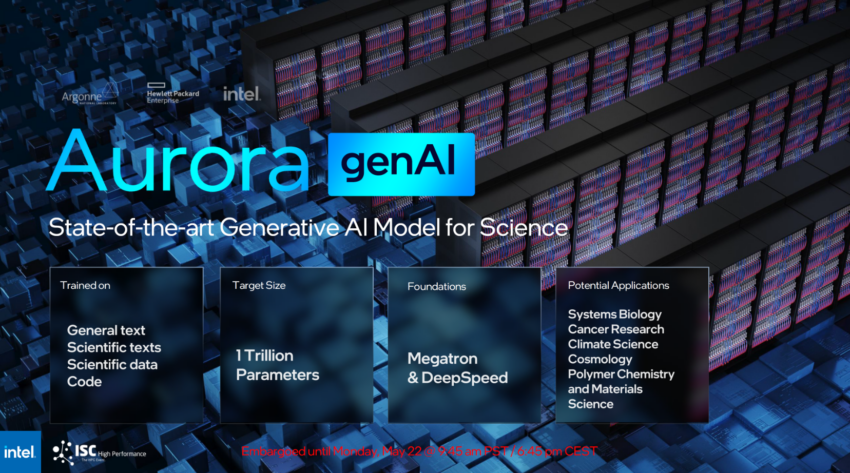

Alongside today’s announcement of the Aurora supercomputer, Intel announced Aurora genAI, a brand-new generative AI model for science.

The Aurora supercomputer, rated at 2 exaflops, will power the Aurora genAI model. As announced during today’s ISC23 keynote, the model will be trained on general text, scientific text, scientific data, and domain-specific codes.

Intel Unveils Aurora genAI “Generative AI Model” For Science, Up To 1 Trillion Parameters

This will be a completely science-based generative AI model with the following potential applications:

- Systems Biology

- Cancer Research

- Climate Science

- Cosmology

- Polymer Chemistry and Materials

- Science

Megatron and DeepSpeed are the cornerstones of the Intel Aurora genAI architecture. Importantly, the new model’s target size is 1 trillion parameters. In contrast, the target size for the free and public variants of ChatGPT is only 175 billion parameters. That makes the new model roughly 5.7 times larger by parameter count.
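The 5.7-fold figure is simply 1 trillion divided by 175 billion. A minimal sketch of that arithmetic follows. The constant names and the `size_ratio` helper are hypothetical and used only for illustration, and both counts are the target sizes quoted here, not measured values.

```python
# Parameter counts as quoted in the article (target sizes, not measurements).
AURORA_GENAI_PARAMS = 1_000_000_000_000  # 1 trillion
CHATGPT_PARAMS = 175_000_000_000         # 175 billion


def size_ratio(larger: int, smaller: int) -> float:
    """Return how many times bigger `larger` is than `smaller`."""
    return larger / smaller


ratio = size_ratio(AURORA_GENAI_PARAMS, CHATGPT_PARAMS)
print(f"Aurora genAI target is about {ratio:.1f}x the ChatGPT figure")
```

The comparison covers parameter count only and says nothing about training data, compute, or model quality.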

These generative AI models for science will be trained on general text, code, scientific literature, and structured scientific data from the fields of biology, chemistry, materials science, physics, and medicine, among others.

The resulting models (with as many as 1 trillion parameters) will be used in a variety of scientific applications, ranging from the design of molecules and materials to the synthesis of knowledge from millions of sources in order to suggest new and intriguing experiments in systems biology, polymer chemistry and energy materials, climate science, and cosmology.

In addition, the model will be utilized to expedite the identification of biological processes related to cancer and other diseases and to suggest drug design targets.